AI Agents: Hype or Reality?

A few years ago, it was all about Blockchain; before that, IoT, then Big Data, and even earlier, the Cloud. Each era brought a paradigm shift of sorts, drawing huge investments and promises. Some delivered, some didn’t, but they each brought advancement in tech.

Today, we find ourselves fully embracing the AI hype cycle that started circa 2022 with OpenAI. This feels different, not because the marketing is better (we could debate both the “Open” and “AI” in OpenAI), but because it’s been delivering real-world applications with unheard-of adoption. It’s not a future promise; it’s happening now. And it’s happening fast! How fast? Well, it’s 2025, and it seems like everyone is jumping on the next big thing: AI Agents.

OpenAI announced Operator, an AI agent that can use its own browser to perform tasks for you. Some companies even got ahead of the curve and shipped agents as early as 2024—like Chatbase. But what exactly is an AI agent? Let me break it down as if it’s your first time hearing about it. I’d say I’ll explain it like I would to a 14-year-old, but let’s be honest—they probably know more about AI agents than we do these days.

Let’s dive in!

What is an AI Agent?

Imagine your company just rolled out a stealth enterprise upgrade—no IT tickets, no downtime, no user manual. It’s the behind-the-scenes team you never see: resolving customer tickets, writing code, translating documents, or drafting marketing copy, all at once. The catch? It costs $200 a month, works 24/7, and turns “urgent” requests into “done” in minutes. No meetings, no onboarding. Like giving your workflow a turbo button nobody saw coming. That’s the promise of AI agents—and no matter how you look at it, it’s enticing. So, how do these work?

AI agents are systems designed to augment a large language model (LLM) by planning tasks, integrating with third-party services, and chaining prompts to refine answers.

What’s an LLM, you ask? An LLM, or Large Language Model, is a deep learning model trained on vast amounts of text to understand and generate human-like language. It predicts the next word (strictly speaking, the next token) in a sequence, which lets it answer questions, write content, translate languages, or brainstorm ideas, all by recognizing patterns in its training data.

In a nutshell, agents can take a relatively simple prompt and turn it into a mix of prompts and API calls. Of course, that ends up being more expensive than running a single prompt (we’ll touch upon that later). Without turning this into a 5-layer wedding cake, there are two high-level categories of AI agents, based on the workflows “they” can follow:

Prescriptive Workflows

Exploratory Workflows

Let's break these down.

Prescriptive Workflows: Results in One Response

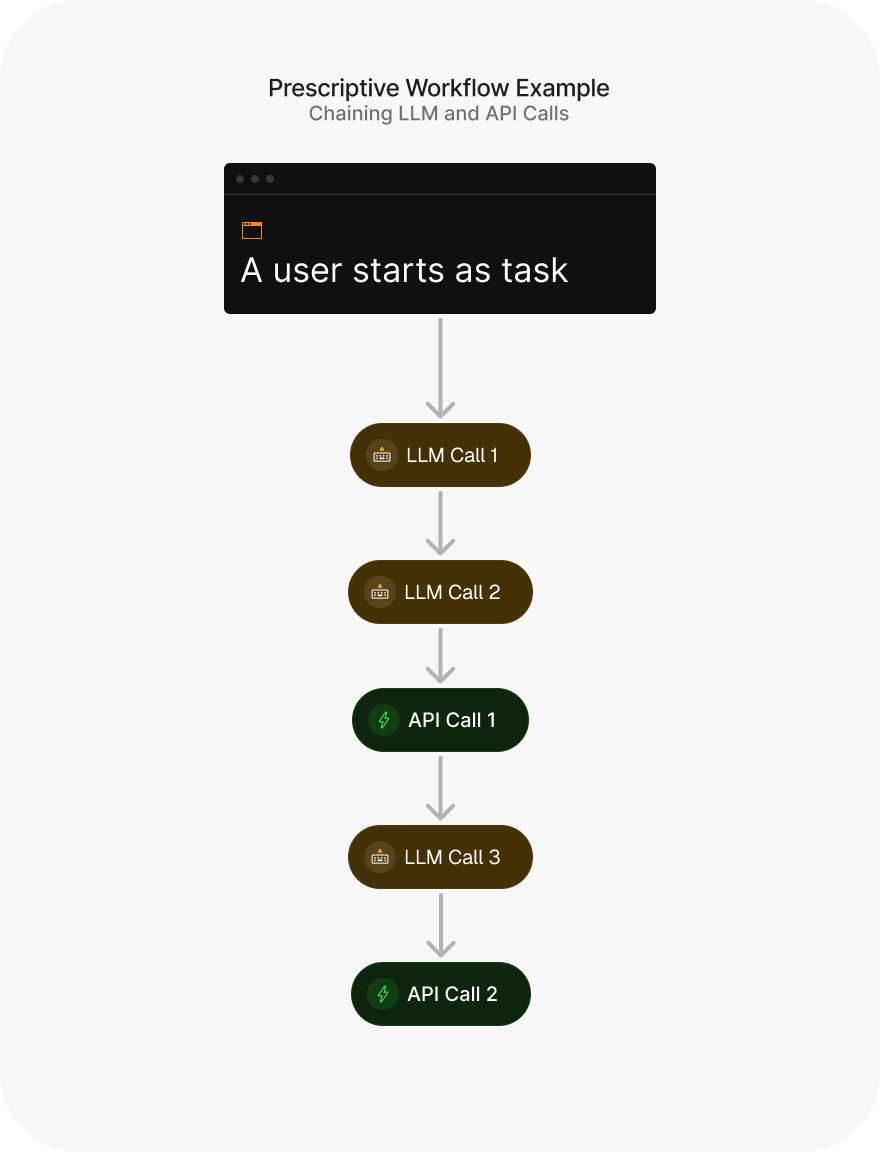

A rather simplistic view is: an AI agent is a series of “regular” prompts chained together. While not magical in itself, this approach allows for various practical implementations.

To that end, a simple implementation of an AI agent can be a for loop that makes a number of LLM calls to your favorite AI model, optionally followed or preceded by a sequence of third-party API calls (or integrations, if you like).
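
The loop described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: `fake_llm` is a hypothetical stand-in for whatever model client you use, and the `STEPS` templates are made up for the example.

```python
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; swap in your provider's client."""
    return f"[model output for: {prompt[:40]}]"


def run_chain(task: str, steps: List[str], llm: Callable[[str], str] = fake_llm) -> str:
    """Run a fixed sequence of prompts, feeding each output into the next one."""
    result = task
    for template in steps:
        # Each step is a prompt template with a single {input} slot.
        result = llm(template.format(input=result))
    return result


# Made-up templates for illustration only.
STEPS = [
    "Write an outline for a blog post about: {input}",
    "Expand this outline into sections: {input}",
    "Polish this draft in a friendly tone: {input}",
]

if __name__ == "__main__":
    print(run_chain("AI agents", STEPS))
```

The order of the steps is fixed in advance, which is exactly what makes this kind of workflow prescriptive.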

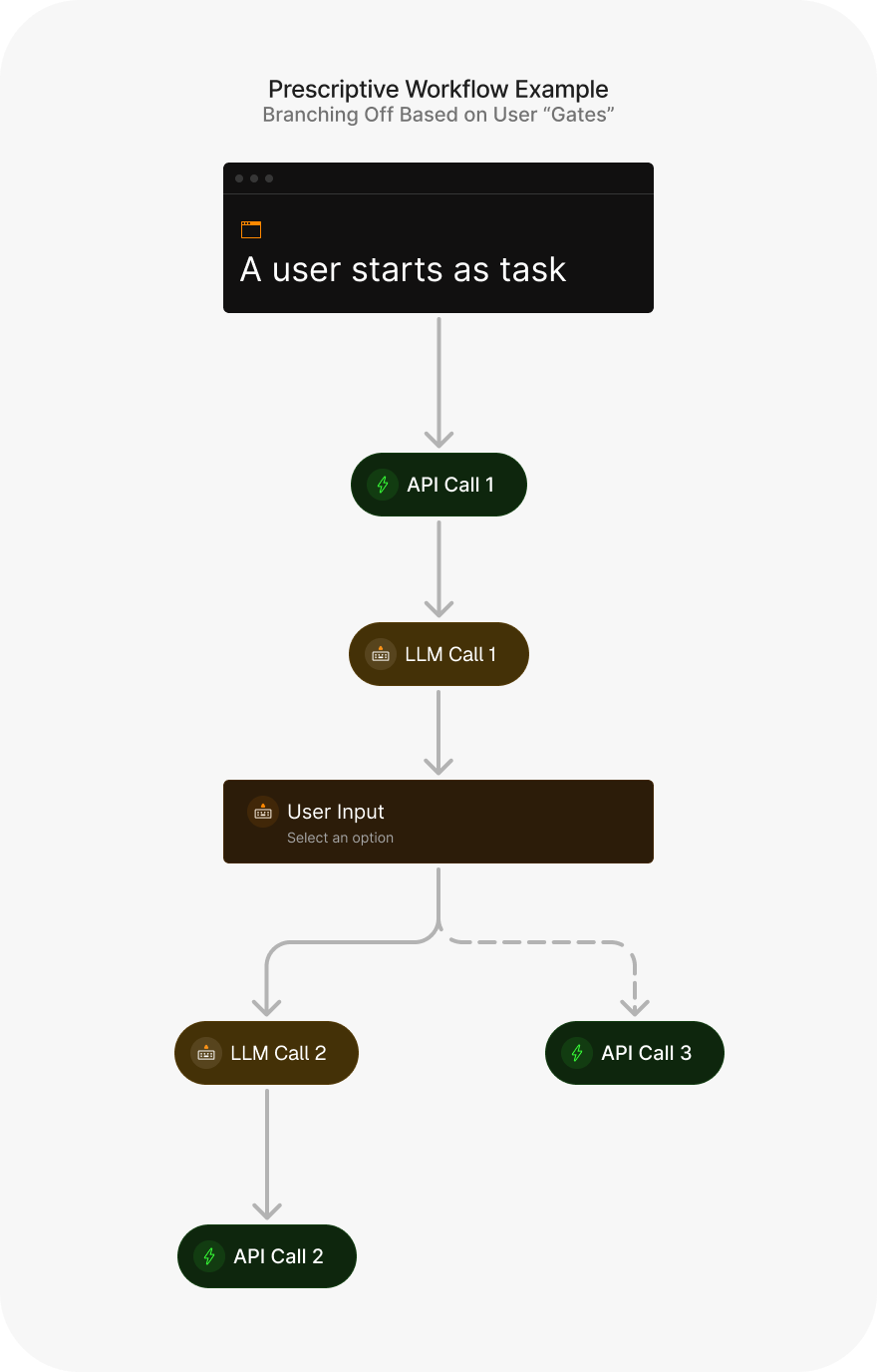

Some of these calls can go to other third-party APIs, and some are controlled by user “gates”, which pause a run until input is sent.

This is particularly useful for overcoming inherent LLM limitations, such as accessing real-time data or data siloed in other systems.

For example, consider an AI agent that processes an employee's holiday request by calling a siloed HR database via an internal API to check remaining leave balances, then auto-approves the request if criteria are met.
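
Here is a rough sketch of that holiday-request flow. Everything in it is assumed for illustration: `fetch_leave_balance` pretends to be the internal HR API, the balances are made up, and the approval rule (requested days must not exceed the balance) is just one plausible criterion.

```python
from typing import Callable, Optional


def fetch_leave_balance(employee_id: str) -> int:
    """Hypothetical stand-in for the internal HR API; the balances are made up."""
    balances = {"e-001": 12, "e-002": 1}
    return balances.get(employee_id, 0)


def parse_days(message: str, llm: Callable[[str], str]) -> Optional[int]:
    """Use the model only for the fuzzy part: reading a number of days out of free text."""
    answer = llm("How many days off are requested? Reply with a number only.\n\n" + message)
    try:
        days = int(answer.strip())
    except ValueError:
        return None  # the model did not return a clean number
    return days if days > 0 else None


def process_request(
    message: str,
    employee_id: str,
    llm: Callable[[str], str],
    get_balance: Callable[[str], int] = fetch_leave_balance,
) -> str:
    """Prompt first, then an API call, then a plain rule written in code."""
    days = parse_days(message, llm)
    if days is None:
        return "needs_review"
    if days <= get_balance(employee_id):
        return "approved"
    return "needs_review"
```

Note that the decision itself is ordinary code. The model handles language, the API supplies the siloed data, and anything unclear falls back to a human.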

Some other examples could be:

Customer Support FAQ Agent: chains 4 prompts to identify a query's intent, retrieve relevant information from an internal knowledge base, and format it into a user-friendly response.

Content Creation Workflow: chains 3 prompts for a blog post: one generates an outline, one expands it into sections, and one polishes the final draft to match a certain writing style.

Meeting Scheduler: chains an API call, a prompt, then another API call: it checks a person’s private calendar for availability, suggests or schedules a meeting at an optimal time, then sends it to a Slack channel.

Prescriptive workflows also allow tasks to be broken into smaller micro-tasks handled in parallel before aggregating results into a single response. This approach can be quite performant and versatile.

On top of this, each micro-task could make an API call on its own to retrieve real-time or siloed data.
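
The fan-out and aggregate pattern might look something like this, using a thread pool from Python's standard library. The `fake_llm` function and the prompt wording are assumptions made for the sketch; a real micro-task could just as well call an API before or after its prompt.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return prompt.upper()


def fan_out(chunks: List[str], llm: Callable[[str], str] = fake_llm, max_workers: int = 4) -> List[str]:
    """Run one micro-task per chunk in parallel threads."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, regardless of which call finishes first.
        return list(pool.map(lambda chunk: llm(f"Summarize: {chunk}"), chunks))


def aggregate(partials: List[str], llm: Callable[[str], str] = fake_llm) -> str:
    """Merge the partial results into a single response with one final call."""
    joined = "\n".join(f"- {p}" for p in partials)
    return llm(f"Combine these notes into one answer:\n{joined}")


if __name__ == "__main__":
    parts = fan_out(["chapter one", "chapter two", "chapter three"])
    print(aggregate(parts))
```

Threads are a reasonable fit here because each call spends most of its time waiting on the network rather than using the CPU.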

In other words, these workflows take features of an LLM that we know and love (contextual understanding, task-agnostic versatility, adaptive reasoning, human-like interaction etc.), and enhance them with capabilities that are otherwise hard if not impossible to achieve.

The good thing about prescriptive workflows is that costs remain predictable—higher than a single LLM call, but stable if variables like API usage and task complexity stay consistent.

However, you can see that scaling these up to a larger number of integrations can become difficult and inefficient. Sometimes not all API calls are needed; other times dynamic user input is required to complete the task.

What if an Agent could decide this for itself?

Exploratory Workflows: Adaptable & Dynamic

You might have read the above and concluded:

“AI agents are 3 LLM calls in a Trench Coat” and moved on with your day.

However, what if I told you that it can sometimes be a winter jacket, finance vest or even an ugly Xmas sweater? You see, not all AI agents are created equal. While the foundation of many agents might resemble chained prompts, they can also have the ability to plan, reason, iterate and self-direct workflows.

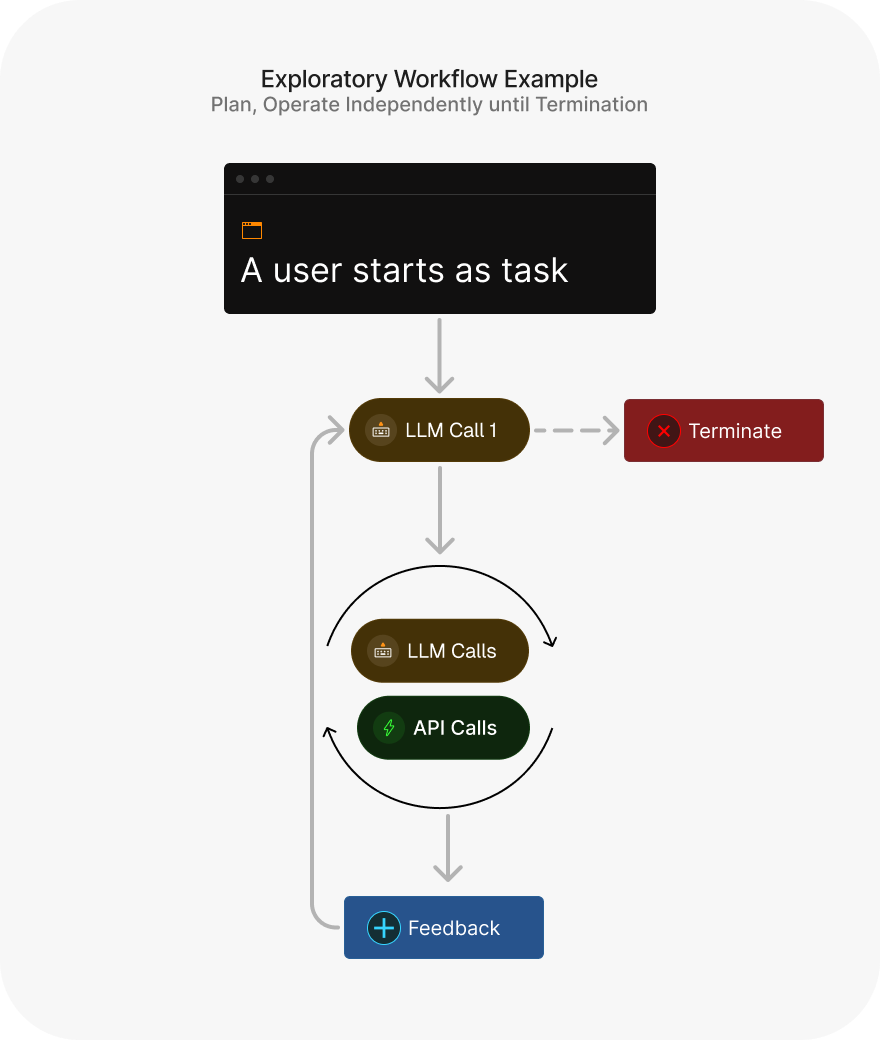

Exploratory workflows are dynamic and adaptable. They can take all possible paths of Prescriptive Workflows, only the paths are not predefined; instead, they can plan, execute and adapt based on machine (API/integration) or human feedback. Deep down they are still a collection of prompting techniques, loops and API calls. Sprinkle in some user input and you have a system that was not possible to build just a few years ago.

These types of workflows can then be more general-purpose and scale to any number of tools and API calls, creating perhaps thousands of individual, dynamic workflows. This is still an enhancement technique, but with a lot more tricks:

Dynamic Planning: The agent decides which tools to use from a centralized registry.

Tool Integration: It integrates with CRM systems or APIs using context like OpenAPI specifications.

Secure Access: Agents can securely access private data using protocols like OAuth.

Iterative Reasoning: They evaluate results and adjust plans until success or failure criteria are met.

Feedback Loops: Agents refine their performance through reinforcement learning or human feedback.
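
To make the first of these tricks concrete, here is a minimal sketch of a tool registry with a planning prompt. The tool functions, their return values and the prompt wording are all hypothetical; real tools would wrap actual APIs behind proper authentication.

```python
from typing import Callable, Dict, List


def search_dropbox(query: str) -> List[str]:
    """Hypothetical tool; a real one would call the Dropbox API."""
    return [f"dropbox result for '{query}'"]


def search_gmail(query: str) -> List[str]:
    """Hypothetical tool; a real one would call the Gmail API."""
    return [f"gmail result for '{query}'"]


# The centralized registry: tool name -> callable.
TOOLS: Dict[str, Callable[[str], List[str]]] = {
    "dropbox": search_dropbox,
    "gmail": search_gmail,
}


def plan(task: str, llm: Callable[[str], str]) -> List[str]:
    """Ask the model which registered tools to use; drop any name not in the registry."""
    prompt = (
        f"Available tools: {', '.join(sorted(TOOLS))}.\n"
        f"Task: {task}\n"
        "Reply with a comma-separated list of tool names to use."
    )
    names = [name.strip().lower() for name in llm(prompt).split(",")]
    return [name for name in names if name in TOOLS]
```

Filtering the model's answer against the registry is a small safeguard: the agent can only ever call tools you registered, whatever the model replies.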

For example, imagine an AI agent tasked with searching across your cloud storage and emails. It would dynamically plan execution steps, calling APIs for Dropbox or Gmail as needed, and iterate based on results until the task is complete. The app itself might just be an input where a user can prompt a search, perhaps specifying a service like 'Dropbox' or more generally requesting to 'search across emails and calendars'.

The AI agent would then plan execution steps based on this initial prompt. It might decide to call one or more APIs, it might have to deal with pagination and sorting, and it could end up going through multiple feedback loops. The key part here is a “Supervisor” prompt that has the “ability” to terminate the process with either a success or an error.
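
A bare-bones version of that loop could look like the sketch below. In a real system `choose_tool` and `supervisor` would each be a prompt to the model; here they are plain callables so the control flow is easy to follow. The names, the three verdict strings and the iteration cap are assumptions for the example.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], List[str]]


def run_agent(
    task: str,
    tools: Dict[str, Tool],
    choose_tool: Callable[[str, List[str]], str],
    supervisor: Callable[[str, List[str]], str],
    max_iterations: int = 5,
) -> Tuple[str, List[str]]:
    """Loop: pick a tool, run it, then let the supervisor decide whether to stop."""
    findings: List[str] = []
    for _ in range(max_iterations):
        name = choose_tool(task, findings)
        if name not in tools:
            return "error", findings  # the planner asked for an unknown tool
        findings.extend(tools[name](task))
        verdict = supervisor(task, findings)  # expected: "success", "error" or "continue"
        if verdict in ("success", "error"):
            return verdict, findings
    return "error", findings  # iteration budget exhausted
```

The final `return` is what keeps a confused agent from looping forever: once the budget is spent, the run ends with an error no matter what the supervisor says.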

One downside of these workflows is that the cost is harder to predict, since the agent decides which “paths” to take within the feedback loop. So in general, you’d set a hard stop after a fixed number of iterations, just so it doesn’t end up in an endless loop consuming the IT budget for the entire month.

Extrapolating this, the possibilities are endless, aren’t they? And therefore the hype seems endless. So maybe we should just replace everything with AI Agents then? Well… it depends.

Do You Need an Exploratory AI Agent?

To decide whether you need an exploratory AI agent or a simpler prescriptive workflow, consider this analogy: Do you need a Ferrari or a Honda to carry your bananas from the grocery store? In most cases, the Honda would do the job just fine, while the Ferrari might use 5x more fuel, make more noise and oops! Turns out I don’t know how to drive the Ferrari and I’ve put it in a ditch (it’s cold where I live).

You see where this is going. In most cases, prescriptive workflows are sufficient. Most tasks can then be completed at a fraction of the cost, with more speed and possibly with more control over the internals. Here’s when you should (and shouldn’t) consider using agents:

Choose Prescriptive Workflows If:

Your task is straightforward and predictable.

You care about speed and low costs.

A single LLM call can get the job done.

You like boring but stable systems.

Choose Exploratory Workflows If:

Your task is open-ended and unpredictable.

You need flexibility and dynamic decision-making.

You’re okay with investing time and money in testing and error-proofing.

You like exciting and/or unpredictable systems that sometimes order an Apple accessory instead of actual fruit.

If you’re a fan of spreadsheets (I won’t judge), here’s a different way to visualize it:

| | Prescriptive Workflows | Exploratory Workflows |
|---|---|---|
| Complexity | Low: follows predefined steps | High: makes decisions dynamically |
| Flexibility | Limited | High |
| Cost | Lower | Higher |
| Reliability | Predictable | Can be error-prone |
| Performance | Normal | Slower |
| Debuggability | Normal | Limited |
| Scalability (feature-wise) | Low | High |

At the end of the day, it is all about choosing the right tool for the job. If an AI Agent is your hammer, you’ll easily be able to find nails, but performance and cost might turn a great idea into a flop. What excites me personally are Prescriptive Workflows. They are cheap, fast and can enhance an already valuable system in a predictable way, whereas the Exploratory ones are more of a gamble.

Implementing Your Very Own AI Agent

If you followed along, you now understand that there isn’t anything magical about AI Agents. Not more than AI itself anyway. So implementing one from scratch is perfectly reasonable. There are plenty of tools out there that promise to make building agents easier.

They’re great and pack all sorts of clever tricks but beware: they can add unnecessary complexity and make debugging and deployment harder. Start simple. Use LLM APIs directly before diving into fancy frameworks. You’d be surprised how much you can achieve with just a few lines of code.
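
As a taste of what “directly” can mean, here is a sketch that calls a chat completions endpoint using only Python's standard library. It assumes OpenAI's HTTP API; the model name and the `OPENAI_API_KEY` environment variable are assumptions too, so adjust them for your provider.

```python
import json
import os
import urllib.request

# Assumption: OpenAI's chat completions endpoint; other providers use different URLs.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, api_key: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build the HTTP request for a single-turn chat completion (model name is an assumption)."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the text of the first reply (needs network access and a key)."""
    request = build_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

A function like `ask` is all the earlier building blocks need: pass it wherever a chain, a gate or a loop expects something that takes a prompt and returns text.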

Final Thoughts

As with all hype cycles, we need to be careful not to get too excited about the future promise, and to focus instead on what is possible in the short term. You’ve probably seen headlines about how AI agents are going to revolutionize everything, from coding to customer service. But are they really a silver bullet? Not quite. That doesn’t mean they won’t change the world, just not overnight.

The challenge lies in balancing their cost & performance with their flexibility. Many simpler tasks can be solved more efficiently today with “regular AI”. With the rapid advancement in performance and price decreases that we’ve been seeing, who’s to say this won’t be the default way we use AI tomorrow?