How Sentry's AI Autofix Changed my Mind About AI Assistants

Dan Mindru - Last Updated:

Note: Since this blog was written, AI Autofix is now Seer.

Blockchain, IoT, Big Data. If you’ve been around in tech for a while, you know that these kinds of buzzwords come and go: they make a splash going in and fizzle out over time.

Seeing many of them come and go over the years has made me skeptical. What are they trying to sell us this time? Some might call it getting grumpy; others might call it becoming an enterprise architect. So you’ll have to forgive me for thinking AI assistants seemed like just another buzzword. After all, they are just 5 API calls wrapped in a gift box. Oh boy, was I wrong.

What’s in an AI Assistant Anyway?!

In layman's terms, AI assistants are like having a capable teammate who can handle everything from sales to scheduling meetings to writing copy, all for a fraction of the cost and in record time. Assistants are built by chaining together multiple prompts or API calls with authentication and user input, and they can plan a workflow themselves. While they’re not perfect (or cheap), AI assistants are already reshaping how we work, and with the overall cost of AI going down, they might just become the go-to solution when implementing AI-powered features.
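To make "chaining prompts" a bit more concrete, here is a minimal sketch in Python. It is not how any particular product works: `fake_llm`, `run_chain`, and the prompt templates are all made-up names for illustration, and the "model" simply echoes its prompt so the example runs offline.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model API call; it only echoes the prompt."""
    return f"[model reply to: {prompt}]"


def run_chain(task: str, steps: list[str], llm: Callable[[str], str]) -> list[str]:
    """Run prompt templates in order, feeding each reply into the next step."""
    outputs = []
    previous = task
    for template in steps:
        # Each template has one {input} slot that receives the previous output.
        prompt = template.format(input=previous)
        previous = llm(prompt)
        outputs.append(previous)
    return outputs


# Illustrative templates only; a real assistant would plan these steps itself.
steps = [
    "Break this task into a short plan: {input}",
    "Carry out the first item of this plan: {input}",
    "Summarize the result for a teammate: {input}",
]

for reply in run_chain("schedule a meeting", steps, fake_llm):
    print(reply)
```

Swapping `fake_llm` for a real API client is where authentication comes in, and pausing between steps to ask for feedback is where user input comes in.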

Looking to dive deeper? Check out this AI agent post and learn how to use agents in your own apps!

The Bug That Started It All

I downplayed AI assistants for a while because they weren’t that “technically advanced” to me. It’s “just AI” with some sugar on top. But as I learned from my years of indie hacking, it is not the advanced tech that makes a product, but the problem it solves. Make no mistake, AI assistants are a powerful tool. It’s only that with great power comes great responsibility. They might not be the solution to all problems, and might not be the fastest at that ― but they sure do have their use cases.

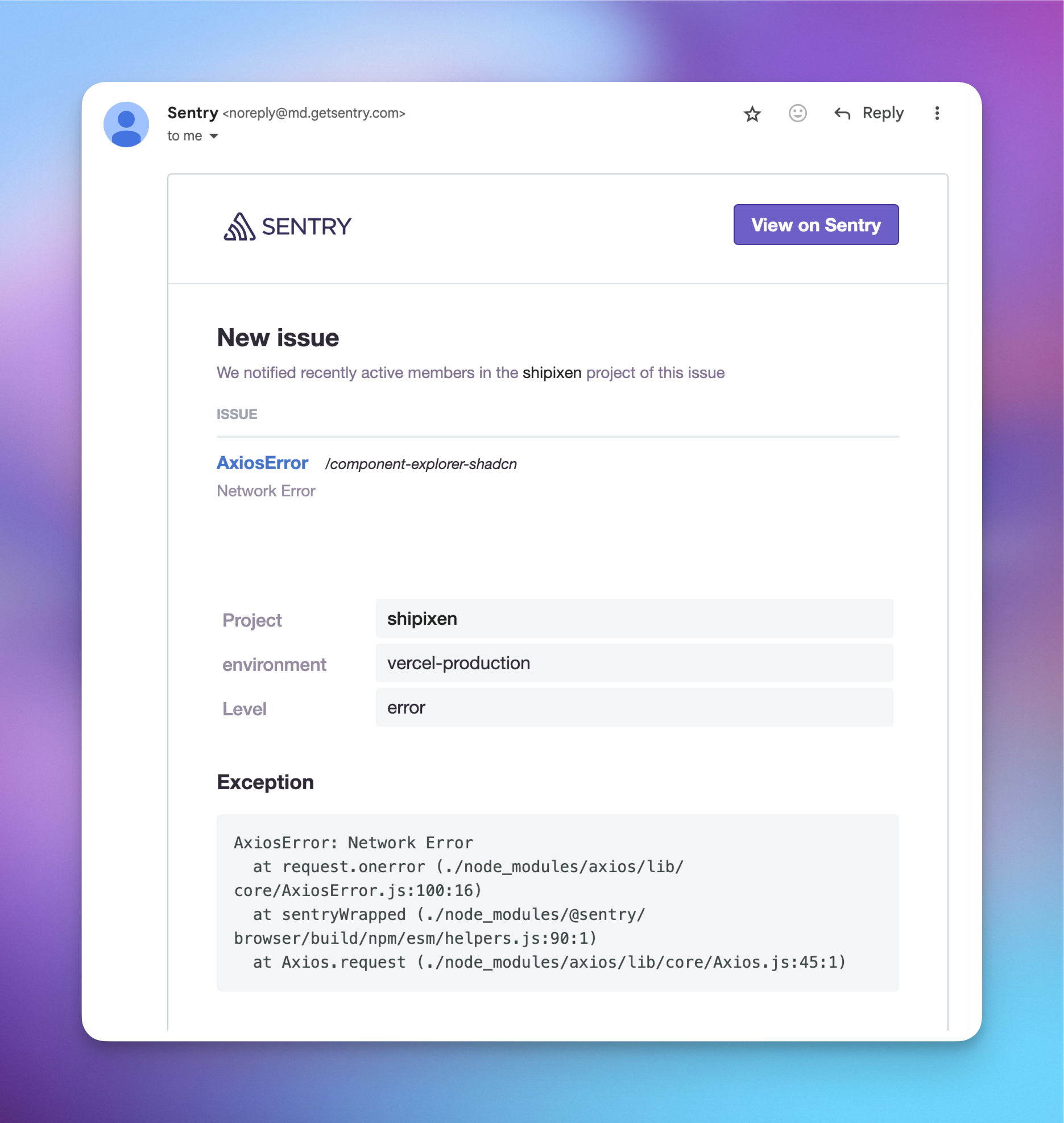

One of the reasons why I personally did not believe in them was because I didn’t see these use cases. Well friends, today I will expand your horizons with a feature I discovered in my favorite monitoring platform. This feature is none other than Sentry’s AI Autofix (currently in beta, I believe, but soon to be generally available). It all started with this one email, like most good stories do. If, like me, you never write bugs, this might take you by surprise.

Being fairly certain this must be a mistake, I clicked on “View on Sentry” to clear things up. You won’t believe what happened next…

My Intro To The First AI Assistant that Blew My Mind: AI Autofix

Upon opening the issue, it quickly became apparent that I might have overestimated my capabilities. “Must just be the exception that proves the rule,” I said to myself.

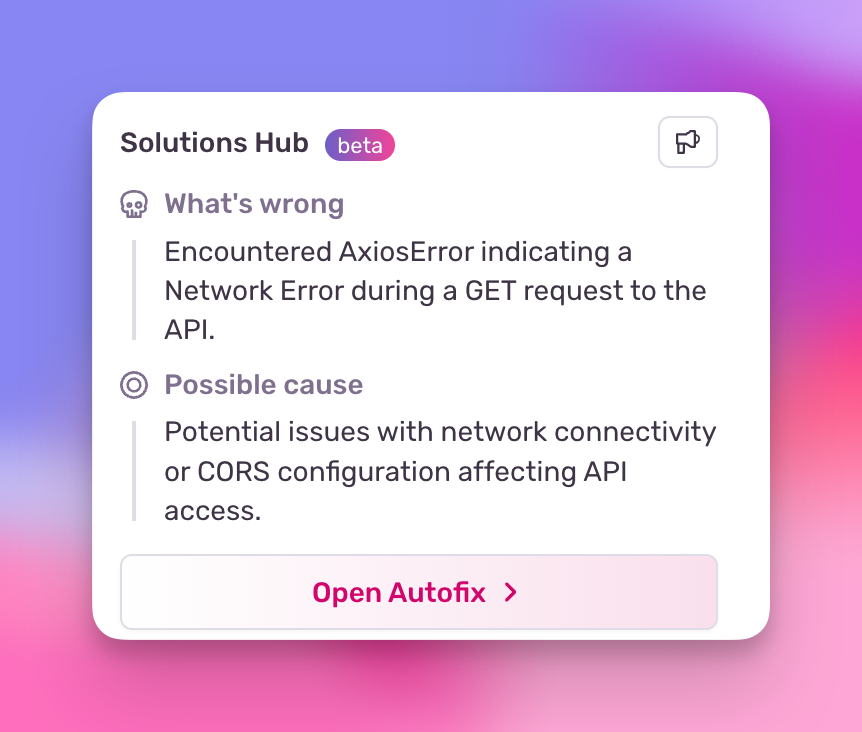

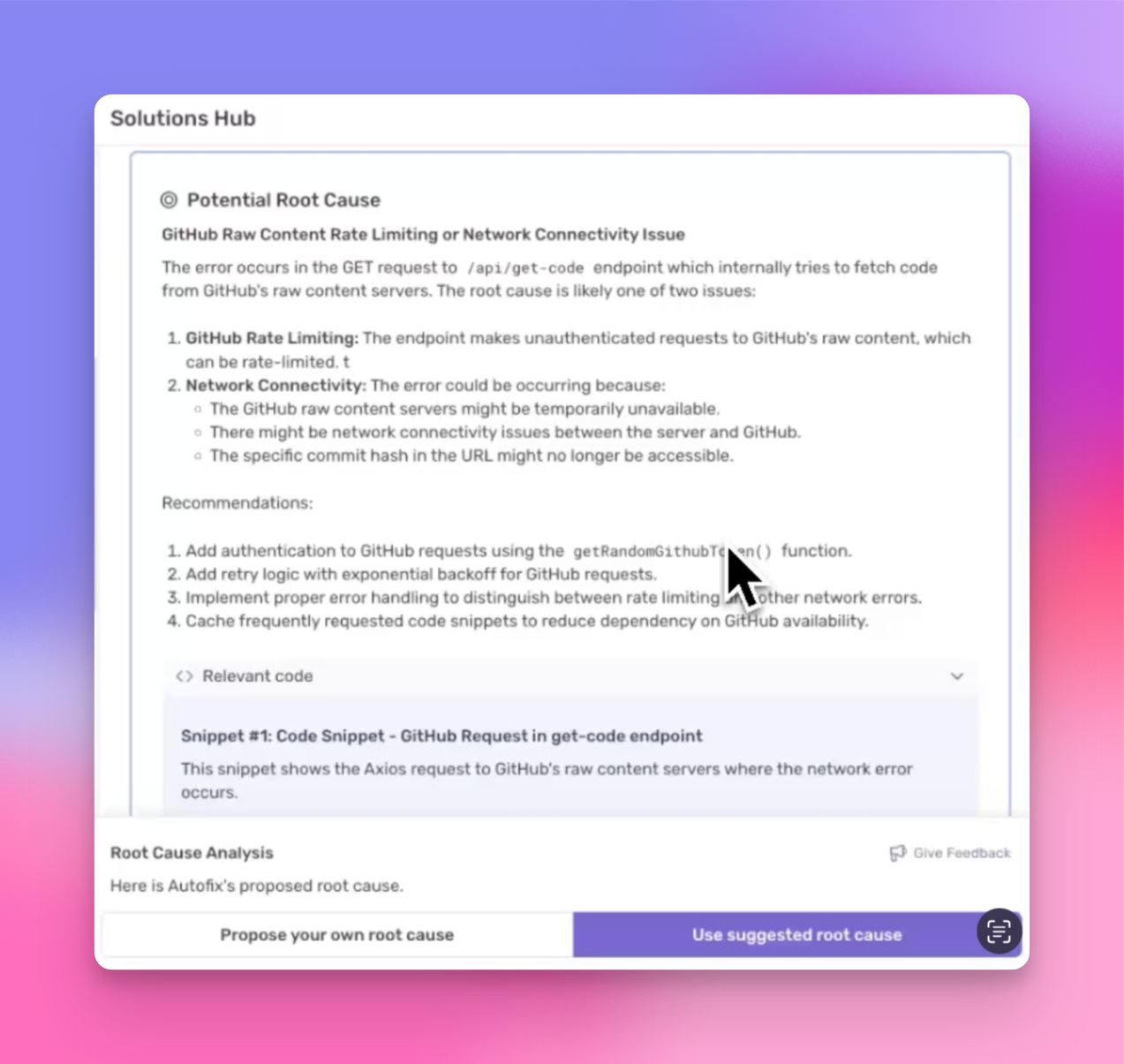

One thing did catch my eye in the top right corner of the issue view though: the solutions hub. The cause was obviously… wrong, and to that I thought: “Oh great, Sentry jumped on the AI bandwagon and I am about to be in for a major disappointment today”.

OK. Jokes aside, this looked to me like a one-shot prompt. What intrigued me was the “Open Autofix” button. Obviously, Sentry has a ton of context for this problem: it has the code base, source maps, the exception details as well as a wealth of anonymous user properties. For the same reason why Sentry is useful for a human, it can be useful to AI. So as soon as I pressed that button, I knew this particular feature was not just a gimmick:

Initial Analysis and Self-Correction

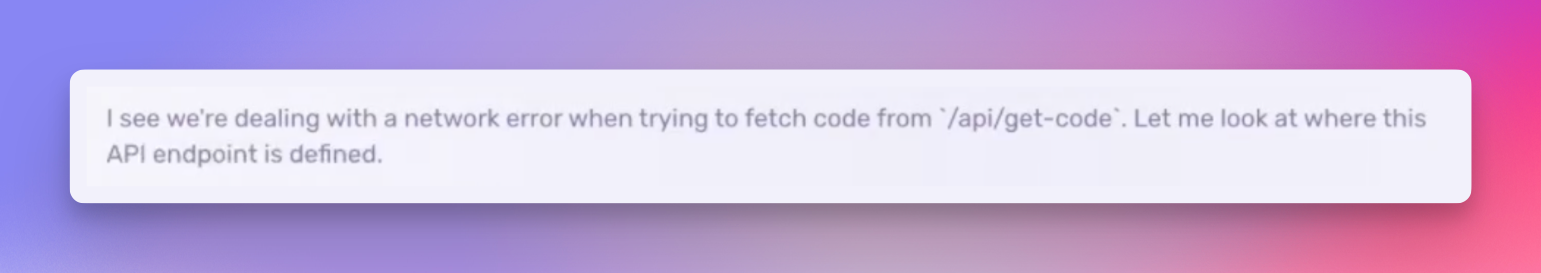

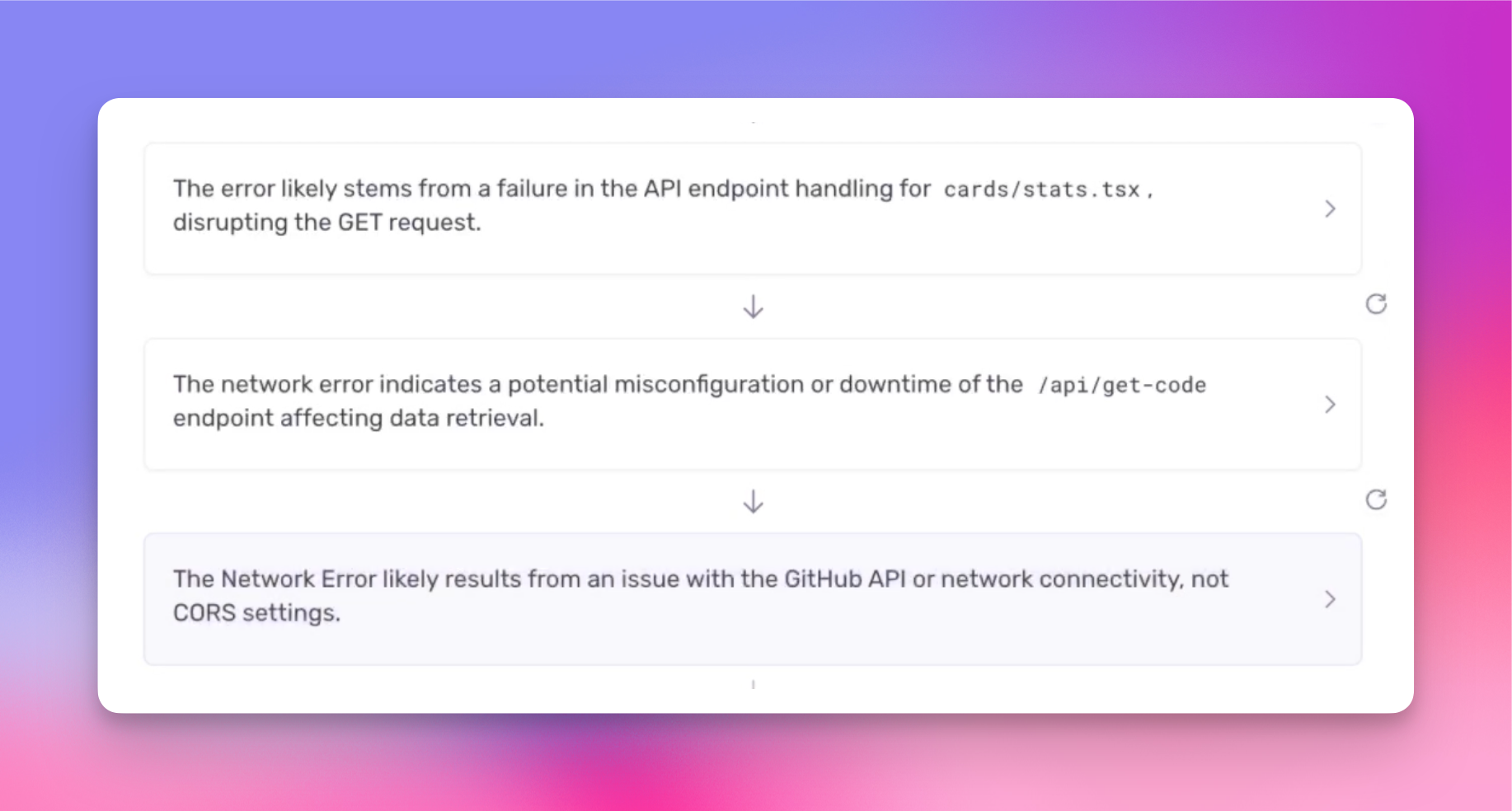

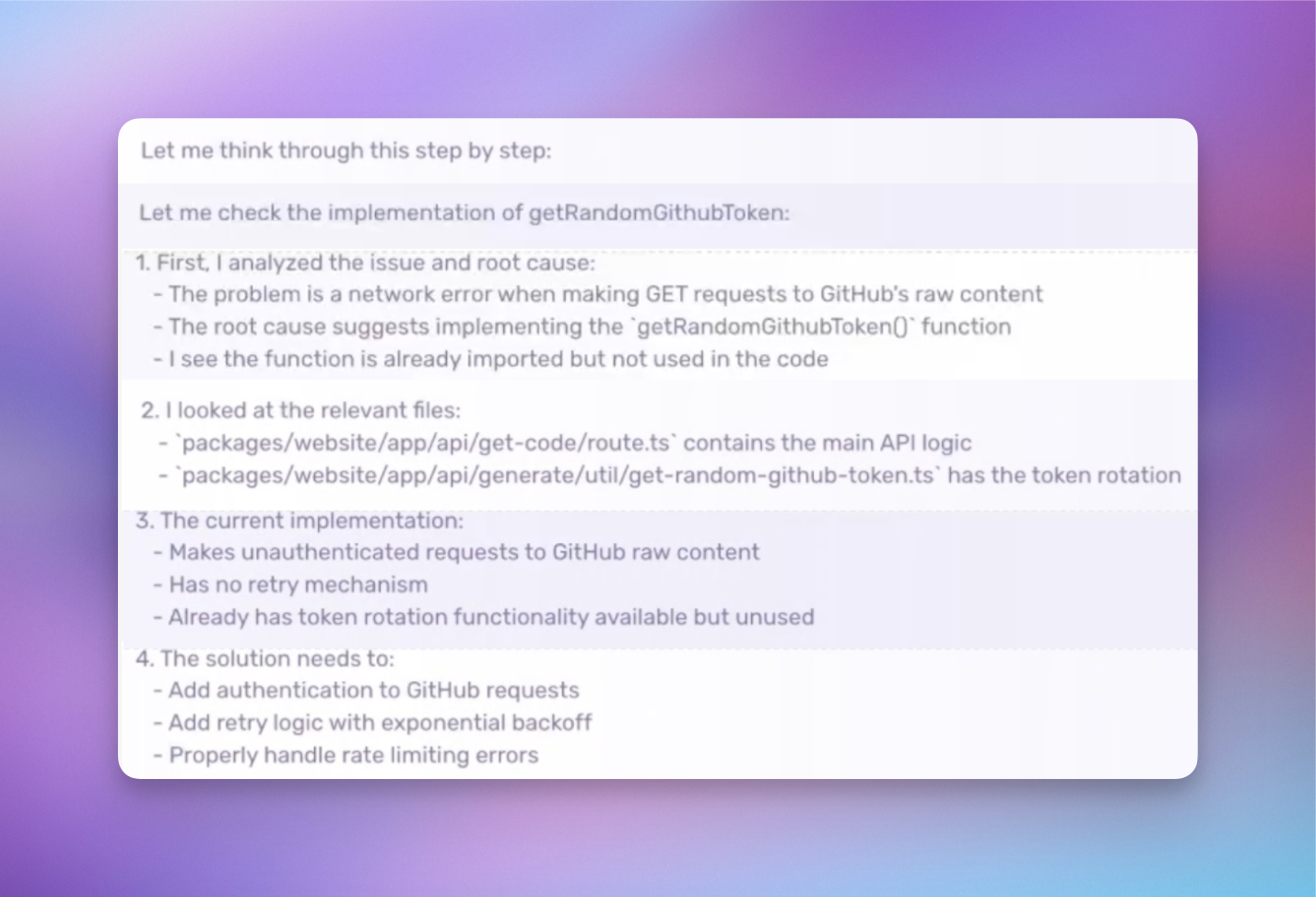

The first part of this does not require any user input. It seems like the AI assistant is in a feedback loop that tries to identify the root cause. It goes through a number of steps and adds the relevant source code to the context.
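I can only guess at the internals from the outside, but the loop I'm describing can be sketched roughly like this. Everything here is hypothetical: `Investigation`, `investigate`, `scripted_decide`, and `fake_search` are names I made up, and the "decisions" are scripted where a real assistant would ask a model what to do next.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Investigation:
    error: str
    context: list[str] = field(default_factory=list)  # source snippets gathered so far
    root_cause: Optional[str] = None


# A decision is ("search", query) or ("conclude", explanation).
Decision = tuple[str, str]


def investigate(
    error: str,
    decide: Callable[[Investigation], Decision],
    search_code: Callable[[str], str],
    max_steps: int = 5,
) -> Investigation:
    """Alternate between deciding and searching until a root cause is stated."""
    state = Investigation(error=error)
    for _ in range(max_steps):
        action, payload = decide(state)
        if action == "conclude":
            state.root_cause = payload
            break
        # Any other action is treated as a search; its result joins the context.
        state.context.append(search_code(payload))
    return state


def scripted_decide(state: Investigation) -> Decision:
    """Hypothetical policy: look at one piece of code, then conclude."""
    if not state.context:
        return ("search", "code that calls the GitHub API")
    return ("conclude", "the request is sent without authentication")


def fake_search(query: str) -> str:
    """Hypothetical code search that returns a placeholder snippet."""
    return f"# source matching: {query}"


result = investigate("HTTP 403 from GitHub", scripted_decide, fake_search)
print(result.root_cause)
```

The `max_steps` cap is my own addition so the sketch can never loop forever.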

The fascinating part is that, by the end of it, it contradicts its initial superficial attempt at a root cause and arrives at a much better conclusion. In fact, looking at this seems oddly… human. And somewhat scary.

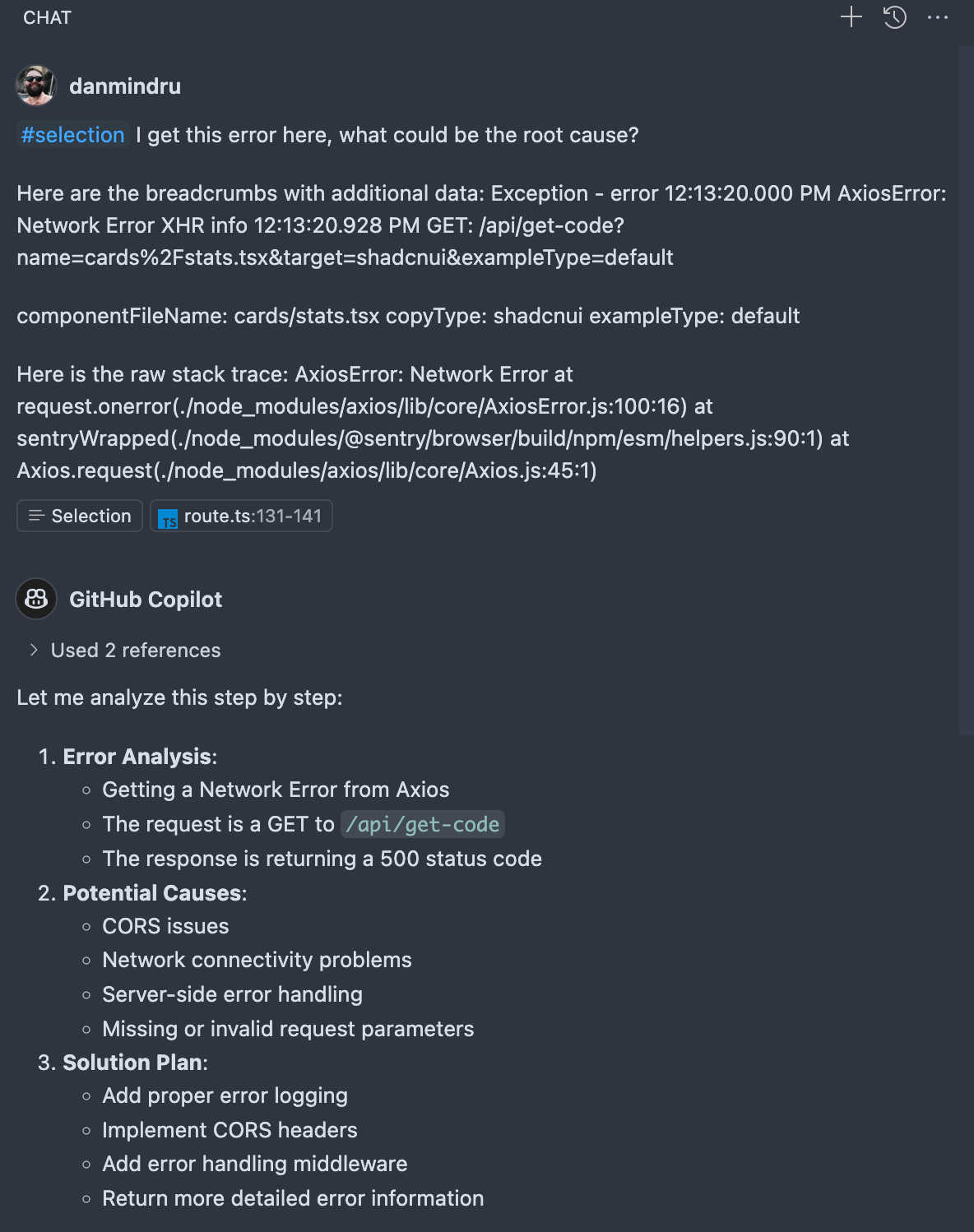

If you’ve ever used ChatGPT for these types of issues, you know this type of answer is hard to get to. Even with the new reasoning models, you often receive responses similar to the superficial root cause we saw initially. What’s even more impressive is that dev tools like GitHub Copilot or Cursor don’t get close to this even with the codebase context in place. Here’s Copilot with Claude 3.5 Sonnet:

As you can see, Copilot ballparks a few potential causes which miss the mark. It also proposes some code changes to add more logging and error handling, which won’t be of much help in this case. Obviously, tweaking this prompt and some human handholding will eventually yield the same results; however, I am quite impressed by Autofix’s ability to get on track on its own.

But hold up ― it was just getting warmed up.

Finding a Fix With My New Best Friend

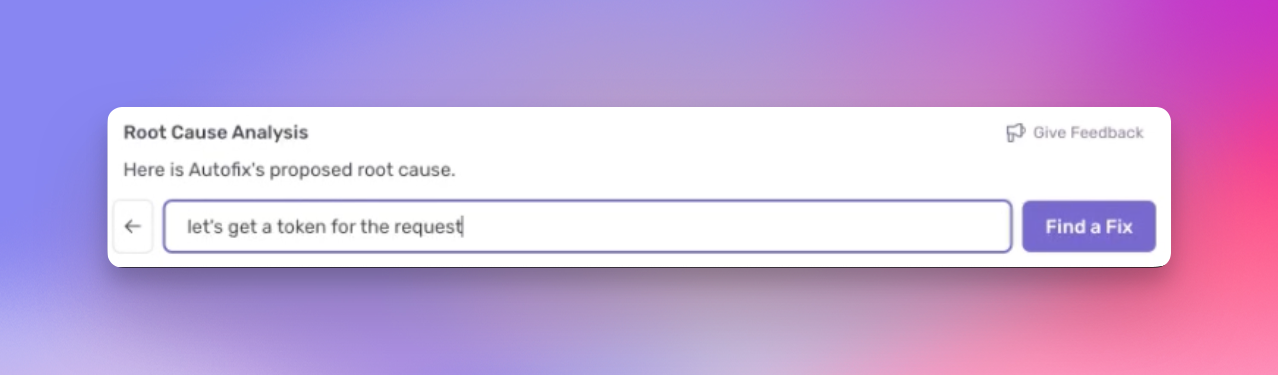

After finding a root cause, I get the opportunity to either use the suggested fix or input a proposal of my own. This is quite nifty, since I imagine this could help a human get out-of-the-box ideas in some cases. This is also where I realized how AI assistants can shine ― the ability to react to human input makes them exponentially more powerful vs trying to guess the perfect path. In this case though, the guess was good enough for me, so I just used the suggested root cause.

Pressing that button took me on to the next step: finding a fix.

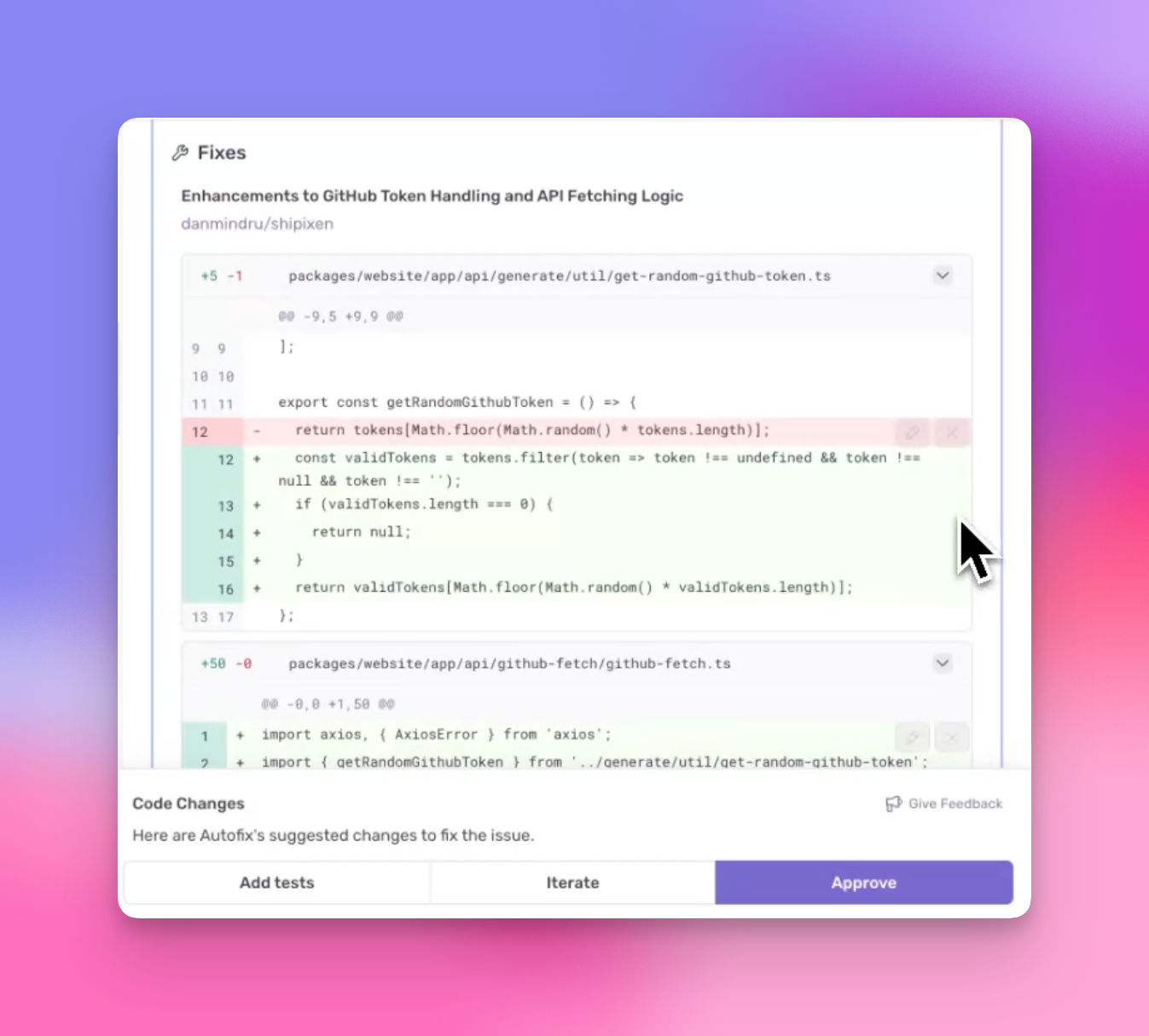

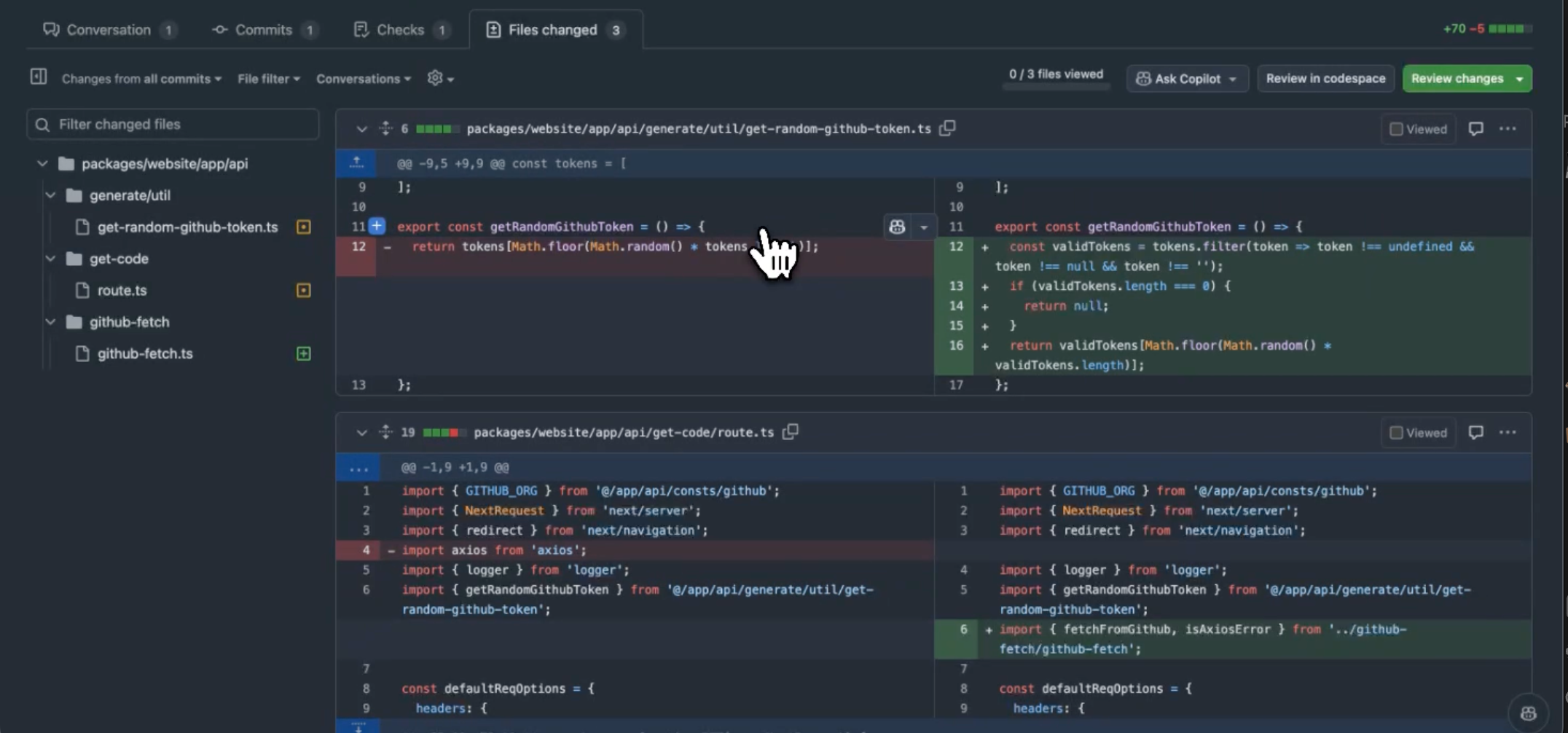

Once more, I got the opportunity to direct the assistant, and this time around I accepted the fix. By skimming through the code snippets it provided, I realized that the GitHub request I was making was not using any authorization or authentication of any kind. Obviously, that can get blocked or rate-limited and I don’t have much control over it. So here goes:
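To show what "using authentication" means for a GitHub request, here is a minimal sketch in Python. It is not the patch Autofix produced for my project: `build_github_request`, `fetch_json`, the `GITHUB_TOKEN` environment variable name, and the `User-Agent` value are assumptions for illustration. The headers and the `/rate_limit` endpoint follow GitHub's REST API documentation.

```python
import json
import os
import urllib.request
from typing import Optional

GITHUB_API = "https://api.github.com"


def build_github_request(path: str, token: Optional[str] = None) -> urllib.request.Request:
    """Build a GitHub REST API request, adding a bearer token when one is given."""
    headers = {
        "Accept": "application/vnd.github+json",
        "User-Agent": "example-app",  # hypothetical app name
    }
    if token:
        # Without this header the call counts as unauthenticated,
        # which GitHub rate-limits far more strictly.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(f"{GITHUB_API}{path}", headers=headers)


def fetch_json(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON body (performs a real network call)."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


# GITHUB_TOKEN is an assumed variable name; nothing is sent until fetch_json runs.
request = build_github_request("/rate_limit", os.environ.get("GITHUB_TOKEN"))
print(request.full_url)
```

Calling `fetch_json(request)` with and without a token set is a quick way to compare the rate limits GitHub reports for each case.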

For those interested in the technical implementation, Sentry has made this open-source on GitHub.

```python
# Behind-the-scenes prompts to determine the root cause; find the rest of the prompt code on GitHub
import textwrap


class RootCauseAnalysisPrompts:
    @staticmethod
    def format_system_msg():
        return textwrap.dedent(
            """\
            You are an exceptional AI system that is amazing at researching bugs in codebases.

            You have tools to search a codebase to gather relevant information. Please use the tools as many times as you want to gather relevant information.

            # Guidelines:
            - Your job is to simply gather all information needed to understand what happened, not to propose fixes.
            - You are not able to search in external libraries. If the error is caused by an external library or the stacktrace only contains frames from external libraries, do not attempt to search in external libraries.

            It is important that you gather all information needed to understand what happened, from the entry point of the code to the error."""
        )
```

At this point I’m already ready to admit I was wrong about AI assistants. They are a brilliant way to enhance current AI tech. But it wouldn’t be fitting for Sentry to stop here. If I learned one thing from using their platform, it’s that they either do the thing as if the world depends on it or they don’t do it at all. Just take their SDKs: you ask for one language, they pretty much offer all languages.

So the AI assistant continues on its own, figures out the required context, understands the existing implementation and makes a plan to fix it! That’s not all, folks.

Creating the Implementation and Making A Pull Request

As you can now tell, whenever Autofix encountered uncertainty or gaps in understanding, it asked for my input instead of making assumptions. The same goes for writing the implementation (which by the way, is pretty darn good!).

There are 3 options here:

- Approve
- Iterate
- Add tests (this is awesome)

At this point, the collaboration feels like working alongside a junior developer, only this junior was probably top of their class, because it did great. Let me highlight some things that impressed me:

- It extended existing code instead of writing new methods
- It used the existing file structure
- It actually wrote clean code with proper error handling
- The proposed implementation worked first-try 🤯

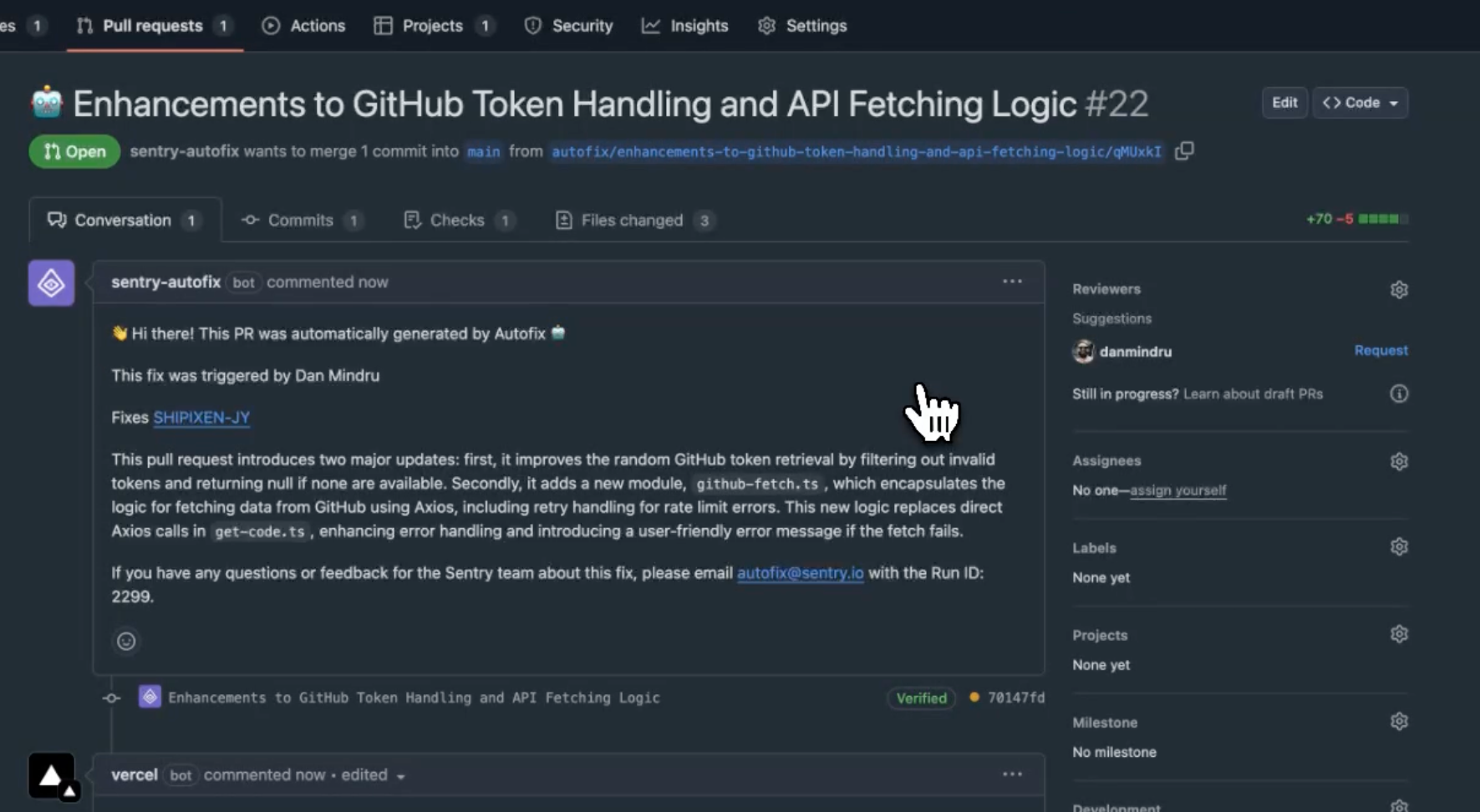

Now I am not sure how much of this is down to the assistant and how much to the fact that LLMs these days are getting quite good at writing code, but at this point I had no doubts left. AI assistants are not just hype: they can not only enhance a good product, but create new product categories on their own. The cherry on top? You have the option to actually create a PR on GitHub!

And they even write a nice description and title for you.

How cool is that?

Final thoughts

At the end of this, I was in awe of the entire workflow. It felt like the future. So obviously, I ran to 𝕏 and bragged about it. Some products have an enormous opportunity to leverage AI assistants because of the context they hold. Sentry is absolutely one of them and now that I’ve tried their offerings ― I’m convinced.

I do understand it has some limitations and it might not always get things right. But the fact that it accepts human input convinced me that Autofix and AI assistants in general are not just hype; they are the next thing in AI. At least until DeepSeek releases their new model… probably sometime tomorrow.

P.S. Remember to check out the implementation to learn more. It’s open source on GitHub! You can opt in for AI Autofix and try it for yourself. I think you’ll be just as blown away as I was!