How we built code reviews that catch issues, not noise

How we built code reviews that catch issues, not noise

This post takes a closer look at how Sentry’s AI Code Review actually works.

As part of Seer, Sentry’s AI debugger, it uses Sentry context to accurately predict bugs. It runs automatically or on-demand, pointing out issues and suggesting fixes before you ship.

We know AI tools can be noisy, so this system focuses on finding real bugs in your actual changes—not spamming you with false positives and unhelpful style tips. By combining AI with your app’s Sentry data—how it runs and where it’s broken before—it helps you avoid shipping new bugs in the future.

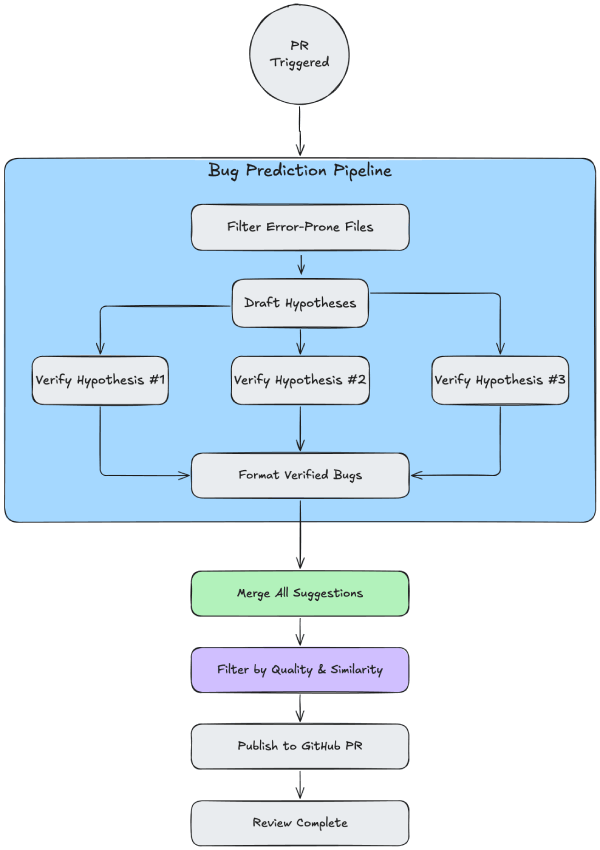

High-level architecture

The code review system detects bugs using both code analysis and Sentry data to deliver suggestions to your PR.

Here’s an overview of AI Code Review’s architecture:

Bug prediction pipeline

To predict bugs with as much precision as possible, we employ a multi-step pipeline based on hypothesis and verification:

Filtering - In this step we gather PR information and filter down PR files to the most error-prone. Especially important for large PRs;

Predicting - The exciting part. Here we run multiple agents that draft bug hypothesis and verify them;

Packaging & Shipping - Aggregate suggestions, filter and parse them into comments, then send them to your PR;

Later on, we will look at some traces from the getsentry/sentry repo that shows the pipeline in action.

Filtering

For PRs with only a few changes, we add all files into the agent’s context. But to prevent the agent from being overwhelmed by large changes, if more than five files will be changed, we narrow it down to most error-prone files.

This is done using an LLM that is instructed to drop testing files and doc changes, as well as files that superficially look less error-prone. That said, test files are searched during both draft and verify agent runs (in the step below).

Predicting

Because context is king, all agents have access to different tools that provide them with rich context to understand the code being analyzed.

Our predictions are ultimately based on:

Actual code change

PR description

Commit messages

Sentry historical data for this repository (see for details)

Code from the repository (via code-search tools)

Web information (via web-search tools)

“Memories” gathered for the repository across PRs. These are specific details about your repository that the system learns overtime with every PR it analyzes. We keep a list of up-to-date, relevant tidbits of information about the repository that is updated with every new PR analyzed. These are things like “tests for this repository use

pytestassertions” or “Theroot_taskintasks.pyhas a hard time limit of 15 minutes (900 seconds).”

The agent workflow generates hypotheses and attempts to verify each one.

Draft hypotheses: A drafting agent creates a report containing an initial analysis of potential bugs. This report is split into at most 3 bug hypotheses.

Verify hypothesis: Concurrently, each hypothesis is analyzed by a dedicated agent. Each verify agent has access to tools to fetch sentry issues and event details. See for details.

Gather results: A final agent gathers all the results and aggregates them into a list of verified bug predictions.

By focusing the verifying agents into a single hypothesis they can deep dive and more correctly assert if that is a valid bug or not.

That’s how we keep the signal-to-noise ratio high for bug predictions.

Example

Let’s look at another example trace for this PR in getsentry/sentry. Specifically for commit 596e046c05cb6ec43ebf924dbc40fd1e3f40db26.

File filtering

Received

- src/sentry/integrations/discord/webhooks/base.py

- src/sentry/integrations/discord/webhooks/message_component.py

- src/sentry/integrations/slack/unfurl/discover.py

- src/sentry/integrations/slack/webhooks/event.py

- src/sentry/rules/actions/integrations/base.py

- src/sentry/sentry_apps/installations.py

Selected

- src/sentry/rules/actions/integrations/base.py

- src/sentry/integrations/discord/webhooks/base.py

- src/sentry/sentry_apps/installations.py

- src/sentry/integrations/slack/webhooks/event.py

- src/sentry/integrations/discord/webhooks/message_component.pyDraft Hypothesis

Now I have a comprehensive understanding of the code changes. Let me analyze the potential issues:

Analysis

This code change is refactoring analytics.record() calls from using string-based event names to using class-based event objects. The repository context mentions that analytics.record() calls should be wrapped in try/except blocks to prevent failures from propagating, as analytics recording is considered non-critical.

Looking at the changes:

Most changes lack error handling: In files like

src/sentry/integrations/slack/webhooks/event.py,src/sentry/integrations/discord/webhooks/message_component.py,src/sentry/integrations/discord/webhooks/base.py, andsrc/sentry/sentry_apps/installations.py, the refactoring changes theanalytics.record()calls but doesn't add anytry/excepterror handling.Only one file adds proper error handling: In

src/sentry/rules/actions/integrations/base.py, the code properly wraps the newanalytics.record()call in atry/exceptblock withsentry_sdk.capture_exception(e).Missing imports: The new event classes are being imported, but I need to verify if these could fail during instantiation.

Potential Bugs

Based on my analysis, here are the potential bugs I've identified:

1. Missing error handling for analytics.record() calls

Location:

src/sentry/integrations/slack/webhooks/event.py:205~211

src/sentry/integrations/discord/webhooks/message_component.py:179~183

src/sentry/integrations/discord/webhooks/base.py:60~67

src/sentry/integrations/discord/webhooks/base.py:67~76

src/sentry/sentry_apps/installations.py:97~111

src/sentry/sentry_apps/installations.py:183~197

src/sentry/sentry_apps/installations.py:258~273Description: The repository context specifically states that analytics.record() calls should be wrapped in try/except blocks to prevent failures from propagating and causing user-facing errors. However, most of the refactored analytics.record() calls in this change do not include this error handling, while they're changing from string-based to class-based event recording.

The new class-based approach could potentially introduce new failure modes during event class instantiation that weren't present with the old string-based approach. For example, if the event class constructors perform validation on the input parameters or if there are issues with the class registration, these could raise exceptions that would now propagate up the call stack and potentially crash the request.

Important things to investigate further:

Whether the new event classes perform any validation during instantiation that could raise exceptions

Whether the old string-based

analytics.record()calls had any implicit error handling that's now missingWhether these analytics calls are in critical request paths where an exception would cause user-facing errors

2. Potential field mismatch in refactored analytics calls

Location: src/sentry/integrations/slack/webhooks/event.py:205~211

Description: The original analytics call included a user_id field implicitly, but the new IntegrationSlackChartUnfurl class shows user_id: int | None = None as an optional field. The refactored code doesn't pass user_id, so it will default to None. I need to check if this was intentional or if user_id should be passed from the request context.

Important things to investigate further:

Whether the original call was supposed to include a

user_idparameterWhether the missing

user_idfield affects analytics data collection in a meaningful way

Based on the repository context emphasizing that

analytics.record()calls should be wrapped intry/exceptblocks, and the fact that most of the changes in this PR don't include this protection, I consider the missing error handling to be the primary potential bug that could cause unexpected crashes in production.

You’ll notice that in what becomes hypothesis 1 the agent says “The repository context specifically states that analytics.record() calls should be wrapped in try/except blocks to prevent failures from propagating and causing user-facing errors”. This comes from the “memories” that we generated for the repository.

Memory context

The exact piece of memory passed to the agent:

When calling analytics.record() in API endpoints or other critical request paths, wrap the call in a try...except Exception as e: sentry_sdk.capture_exception(e). This ensures that failures in analytics event recording (e.g., due to invalid event data or internal analytics system issues) do not propagate and cause user-facing errors or block the main request flow. Analytics recording is considered non-critical for the primary user experience.

Analysis of Hypothesis 1

The initial analysis of the bug before evaluating it against the bug guidelines:<initial_followup>

Based on my thorough investigation of the codebase, I now have a clear understanding of the hypothesis and the evidence. Let me analyze this systematically:

Evidence Analysis

1. Repository Context Verification

The repository context correctly states that analytics.record() calls should be wrapped in try/except blocks. However, my investigation shows this guidance is inconsistently followed across the codebase.

2. Existing Error Handling Patterns

Looking at actual implementations:

Examples WITH proper error handling:

src/sentry/middleware/devtoolbar.py: Wrapsanalytics.record()intry/exceptwithsentry_sdk.capture_exception(e)src/sentry/rules/actions/integrations/base.py: The code change itself shows proper error handling foranalytics.record()callssrc/sentry/api/endpoints/organization_events_stats.py: Containsanalytics.record()wrapped intry/exceptwithsentry_sdk.capture_exception(e)specifically for agent monitoring queries

Examples WITHOUT error handling:

From my search results, I found hundreds of analytics.record() calls throughout the codebase that lack try/except protection, suggesting this is a widespread pattern, not an isolated issue.

3. Sentry Issues Evidence

The Sentry issues I examined show:

Issue 6871320642: A KeyError occurring during analytics event serialization, specifically in

SentryAppSchemaValidationError.serialize(). This demonstrates thatanalytics.record()can indeed fail with runtime exceptions.Issue 6847278399: A ValueError occurring when analytics event validation fails for missing required attributes in

TrialUsageEndNotification. This shows another way analytics events can throw exceptions.

Both of these issues show that the analytics system can fail in various ways, supporting the hypothesis that analytics.record() calls can raise exceptions.

4. New Class-Based Events Risk Assessment

Looking at the code change, the refactoring moves from string-based events to class-based events:

Old approach:

analytics.record(

"integrations.slack.chart_unfurl",

organization_id=organization.id,

unfurls_count=0,

)New approach:

analytics.record(

IntegrationSlackChartUnfurl(

organization_id=organization.id,

unfurls_count=0,

)

)The new approach introduces event class instantiation, which could potentially fail during:

Class constructor validation

Field validation

Dataclass transformation (as evidenced by the Sentry issues)

5. Critical Request Path Analysis

The files being modified include:

src/sentry/integrations/slack/webhooks/event.py // Webhook endpoints

src/sentry/integrations/discord/webhooks/ // Webhook endpoints

src/sentry/sentry_apps/installations.py // Installation managementThese are critical request paths where an unhandled exception would cause user-facing errors.

Bottom-Up Assessment

Evidence supporting the hypothesis:

✅ Repository context explicitly states

analytics.record()should be wrapped intry/catch✅ Real Sentry issues show

analytics.record()can fail with various exceptions✅ Only one of the modified files (

src/sentry/rules/actions/integrations/base.py) includes proper error handling✅ The other files lack error handling despite being in critical request paths

✅ The new class-based approach potentially introduces new failure modes

✅ Similar patterns in other parts of the codebase already include

try/catchforanalytics.record()

What I couldn't verify:

The exact likelihood of the new event classes failing during instantiation

Whether the specific event classes used in the code change have known validation issues

Conclusion

The hypothesis is VALID as a real bug. The evidence strongly supports that:

The repository explicitly requires

analytics.record()calls to be wrapped intry/exceptblocksReal production issues demonstrate that

analytics.record()can and does fail with various exceptionsThe code change violates this guideline in multiple critical request paths

The new class-based approach could introduce additional failure modes compared to the old string-based approach

This is a genuine bug that could cause unexpected crashes in production when analytics events fail to record. The fact that only one of the modified files (src/sentry/rules/actions/integrations/base.py) includes proper error handling while the others don't makes this a clear violation of the established pattern and could lead to user-facing errors in critical integration workflows.</initial_followup>

The revised followup findings after applying the bug guidelines:<revised_followup>

Looking at my analysis against the specific filtering guidelines for missing error handling:

Filtering Against Missing Error Handling Guidelines

1. Evidence that the block could raise an error: ✅ STRONG EVIDENCE

Two concrete Sentry issues (6871320642, 6847278399) demonstrate

analytics.record()failures withKeyErrorandValueErrorRepository context explicitly states analytics calls should be wrapped in

try/exceptMultiple existing examples in the codebase already wrap

analytics.record()intry/except

2. Graceful handling is the right thing to do: ✅ CONFIRMED

Repository context explicitly states analytics recording is "non-critical for the primary user experience"

Failures should not propagate to cause user-facing errors

Analytics is clearly intended to be non-blocking

3. Code execution context - end-user vs backend: ✅ USER-FACING CODE

src/sentry/integrations/slack/webhooks/event.py // Handles incoming Slack webhook events

src/sentry/integrations/discord/webhooks/ // Handles Discord webhook events

src/sentry/sentry_apps/installations.py // Handles Sentry App installationsThese are all user-facing integration endpoints where unhandled exceptions would:

Cause webhook failures visible to users

Break integration functionality

Return error responses to external services (Slack, Discord)

4. Tracing execution to bottom of stack: ✅ CONFIRMED

Webhook failures would propagate to external services

Installation failures would be visible to users in the UI

No higher-level error handling wraps these analytics calls

Revised Analysis

The hypothesis is VALID as a real bug. This meets all criteria for missing error handling:

Strong evidence of potential failures: Real production Sentry issues demonstrate

analytics.record()can failUser-facing code paths: These are integration endpoints where failures directly impact users

Repository guidelines violated: Explicit guidance to wrap

analytics.record()calls is not followedGraceful degradation required: Analytics failures should not break core functionality

The bug could cause:

Webhook integration failures for Slack/Discord users

Sentry App installation failures

Broken integration workflows

This is a genuine missing error handling bug in critical user-facing code paths.</revised_followup>

As part of evidence gathered by the agent to validate hypothesis 1 you’ll notice that it points to past Sentry issues in the code base. They follow from similar code patterns as the one the agent was analyzing.

Analysis of Hypothesis 2

The initial analysis of the bug before evaluating it against the bug guidelines:<initial_followup>

Based on my analysis, I can now provide a definitive assessment of the hypothesis. Let me examine the evidence:

Analysis of the Hypothesis

The hypothesis states that "The refactored analytics call for IntegrationSlackChartUnfurl does not pass a user_id, causing it to default to None. The original string-based call may have implicitly included this field, so its omission in the new class-based call could result in a loss of important analytics data."

Evidence from the Code

Looking at the exact code change in src/sentry/integrations/slack/webhooks/event.py:

Original code:

analytics.record(

"integrations.slack.chart_unfurl",

organization_id=organization.id,

unfurls_count=0,

)New code:

analytics.record(

IntegrationSlackChartUnfurl(

organization_id=organization.id,

unfurls_count=0,

)

)Key findings:

The original call did NOT include

user_id: The originalanalytics.record()call only passedorganization_idandunfurls_count=0. There was nouser_idparameter in the original call.The

IntegrationSlackChartUnfurlclass definition (fromsrc/sentry/integrations/slack/analytics.py):

@analytics.eventclass("integrations.slack.chart_unfurl")

class IntegrationSlackChartUnfurl(analytics.Event):

user_id: int | None = None

organization_id: int

unfurls_count: intContext where

user_idis available: In theon_link_sharedmethod where this analytics call occurs, there is access toslack_request.user_idandrequest.user. Throughout the file,slack_request.user_idis used extensively for logging and other purposes.Different usage in another file: In

src/sentry/integrations/slack/unfurl/discover.py, the sameIntegrationSlackChartUnfurlclass IS used with auser_id:

analytics.record(

IntegrationSlackChartUnfurl(

organization_id=first_org_integration.organization_id,

user_id=user.id if user else None,

unfurls_count=len(unfurls),

)

)Conclusion

The hypothesis is INVALID.

Here's why:

No implicit

user_idin original call: The fundamental assumption of the hypothesis—that the original string-based call "may have implicitly included" auser_idfield—is factually incorrect. The original call explicitly did NOT include anyuser_idparameter.Consistent behavior: Both the old and new implementations omit

user_id, so there's no change in behavior. The new call defaultinguser_idtoNoneis the same outcome as the original call not includinguser_idat all.Not a data loss: Since the original call never captured

user_id, there's no "loss of important analytics data" as the hypothesis suggests. The analytics were already missing this information.

While it's true that the refactored code could potentially include the user_id (since slack_request.user_id is available in the context), the absence of this field is not a bug introduced by the refactoring—it's a preservation of the existing behavior.

This is not a bug that would cause server crashes, major logical issues, or performance problems. At most, it could be considered a missed opportunity for enhanced analytics, but that would be a separate enhancement request, not a bug introduced by this refactoring.</initial_followup>

The revised followup findings after applying the bug guidelines:<revised_followup>

Since I concluded in my followup analysis that the predicted bug is not valid, I'm skipping this filtering step as instructed.

The hypothesis was invalid because the original analytics call never included a user_id parameter, so the refactored call maintaining this same behavior (with user_id defaulting to None) is not a bug - it's preserving the existing functionality without any data loss.</revised_followup>

In the end Hypothesis 1 is marked as a potential bug and showed to the user here. It includes reference to one of the Sentry issues used to validate the hypothesis.

How Sentry context is used

The verify agent is instructed to adopt a “click-into” flow for analyzing issues. First, it searches for a large set of issues, receiving a short summary of each. Next, if the agent believes a particular issue is relevant to the code change, it fetches details about that issue. This flow allows the agent to decide on its own whether it needs runtime knowledge to analyze the bug, and keeps its context focused.Here are the search tools the agent may call:

Fetch past Sentry Issues by keywords. This tool is akin to giving the agent the ability to search the Issue Feed in the Sentry UI.

Fetch past Sentry Issues by error type. This tool is useful when the agent can guess the bug’s specific error type (e.g., KeyError) and wants to see if similar bugs have occurred in the past. For example, is the code attempting to access data which—upon inspection of the object in production—is missing keys/attributes?

Fetch past Sentry Issues by file name and function name. This tool returns issues with an error whose stack trace overlapped with a specific function. The agent is instructed to use this tool to inspect variable values in production, or to determine if certain functions are known to be part of error-prone paths in the code.

Each of these searches returns, for each matching issue, the issue ID, title, message, code location, and (if it exists) Seer’s summary of the issue, which is based on the error event details and breadcrumbs.With this information, the agent can determine if any of the returned issues are relevant enough to warrant a closer look. If so, it can call a tool to fetch the event details, which includes the stack trace and variable values for that issue. This tool also returns (if it exists) Seer’s root cause analysis of the issue. At this point, the agent may incorporate this production/runtime context into its analysis. If it does, the bug prediction comment contains links to relevant Sentry issues.

Maintaining quality predictions

After we gather all suggestions, they go through an extra filtering stage to ensure you only receive suggestions that are relevant to you. We filter on a number of criteria, but the main ones are:

Confidence and severity

The agents that generate suggestions also provide an estimate of their confidence that the issue should be addressed and an assessment of its severity. The scale is 0.000 to 1.000, with 1.000 being a guaranteed production crash. Suggestions with low confidence or low severity are discarded.Similarity with past suggestions

In suggestion similarity we look at past suggestions for a repository and how that team reacted to them (by tracking 👍/👎 reactions in the comments). We use embeddings and cosine similarity (aka vector search) to filter out suggestions that are too similar to downvoted suggestions sent in the past. This empowers teams to select what kinds of suggestions they want to receive from the reviews.

Agent evaluation

To validate our system’s performance, we periodically run a set of known bugged and safe PRs through our bug predictor. We do this to evaluate performance and cost, prompt changes, model changes, new context and architectural changes. Each run gives us numbers for precision, recall, and accuracy that we've consistently improved over time. This also provides early signal if we're about to introduce regressions to the system. That said, while these metrics provide a useful directional signal, we recognize their limitations and avoid over-indexing on them.This controlled, consistent evaluation has been key to maintaining confidence in the system as it evolves. And while building this evaluation pipeline was costly upfront, it’s more than paid off in the reliability and trust we now have in the product.

Dataset collection

All datasets are stored in Langfuse. Each dataset item specifies an existing PR and what the expected bug(s) are (can also be no bug). Currently we have several datasets we run our evals against:

bug-prediction- the giant set of baseline tests. This was manually curated by the team when we initially started building the AI agent. Today it contains around 70 items. Typically we use this to report our scores (precision, recall, accuracy)bug-prediction-performance-issues- a dataset dedicated to performance issues, with the introduction of improving the agent to predict performance bugs we’ve introduced a set of PRs with performance issues.

Context Mocking

One of the core features of the bug prediction agent is the ability to analyze Sentry context therefore in the agent evaluations it is important to evaluate the qualities of it.

The simplest approach is to directly fetch Sentry context from the live Sentry API similar to how the production system would do it, however doing so would introduce three major problems in the context of evals:

The results from Sentry API between multiple runs would be inconsistent because new Sentry contexts (eg a new Sentry Issue was created) might be introduced or existing Sentry contexts might be updated between the first and second run.

Depending on the dataset item (ie the PR used for eval) and when the evaluations was ran there might be a chance that a leaking problem might be introduced, meaning information that should not have been available to this test was made available. An example of this would be if the PR being tested was able to fetch a Sentry Issue that was created as a result of that said PR. In production this would not be an issue because naturally the changes in the PR has not made it to the system yet when the agent is ran, however in our evaluation dataset the PRs we selected might have been merged already.

Querying from the live API is not very performant, the evaluations we currently have is aimed at understanding the quality of the predictions not so much the performance aspect of it (performance testing is separate from evaluations).

To solve for each of the problems above we have opted to use a “context mocking” solution where Sentry context are snapshotted, cached, and fetched locally during evaluation runs.

To snapshot existing DB of Sentry contexts we run a tool when a new dataset of PRs are created. This tool fetches all Sentry contexts up to the timestamp of the PRs ensuring nothing is fetched from timestamps after the creation of those PRs.

All the data is sanitized an indexed into a small SQLite file that is uploaded to our cloud storage to be downloaded at the start of each evaluation run.

When running the agent in evaluation mode, instead of fetching from live Sentry API, we mock those tool calls to these set of mocked tools that would fetch Sentry context directly from the local SQLite DB.

Where AI Code Review is going next

Like any software, we’re constantly working to make AI Code Review better and more useful. We continue to improve it through evaluations, investigating new context to add, changing prompts, and a lot more. We’re exploring more context sources to find the most impactful context, and working on making the predictions more actionable so you can fix issues as easily as the review brings them up.